NicTool Enterprise Implementation

Implementation Notes

We learned a great deal during the implementation of this project. I shall briefly cover a few of the lessons here.

Query Load

Frankly, I didn't know what to expect during the implementation, so I planned for the worst. I had tested tinydns, BIND 8, and BIND 9 and knew that tinydns could serve queries an order of magnitude faster than BIND. I also knew that our BIND servers were weak-kneed and constantly running at their limits. We would have 250,000 zones from the "big three" legacy systems, and determining the actual authoritative query load on those systems was next to impossible, so I guessed on the high side.

It turned out that even a year and several more acquisitions later, with more than 400,000 zones loaded, any one DNS server in any cluster could easily handle the load for its entire cluster without breaking a sweat. I had also designed the system so that, should two DNS clusters drop off the internet, the remaining cluster could carry the query load of all three. We never tested this, but we have good reason to believe this design goal was met.
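The two design goals above reduce to simple capacity arithmetic. The sketch below is a hypothetical illustration, assuming query load divides evenly across the surviving clusters and then evenly across the surviving servers in each cluster. The `Topology` class, the `per_server_load` and `has_headroom` functions, and the 9,000 queries/sec figure are all invented for the example; they are not measurements or code from this deployment.

```python
from dataclasses import dataclass


@dataclass
class Topology:
    """Hypothetical description of the DNS deployment."""
    clusters: int             # independent DNS clusters (three in this design)
    servers_per_cluster: int  # servers behind each cluster's load balancer


def per_server_load(total_qps: float, topo: Topology,
                    failed_clusters: int = 0,
                    failed_servers_per_cluster: int = 0) -> float:
    """Return the queries/sec each surviving server must answer.

    Assumes (hypothetically) that load divides evenly across the surviving
    clusters and then evenly across the surviving servers in each one.
    """
    live_clusters = topo.clusters - failed_clusters
    live_servers = topo.servers_per_cluster - failed_servers_per_cluster
    if live_clusters < 1 or live_servers < 1:
        raise ValueError("no surviving DNS capacity")
    return total_qps / live_clusters / live_servers


def has_headroom(total_qps: float, server_capacity_qps: float, topo: Topology,
                 failed_clusters: int = 0,
                 failed_servers_per_cluster: int = 0) -> bool:
    """True if every surviving server stays within its rated capacity."""
    load = per_server_load(total_qps, topo, failed_clusters,
                           failed_servers_per_cluster)
    return load <= server_capacity_qps


if __name__ == "__main__":
    topo = Topology(clusters=3, servers_per_cluster=3)
    total = 9000.0  # hypothetical peak authoritative queries/sec, all clusters
    print(per_server_load(total, topo))                     # 1000.0, normal operation
    print(per_server_load(total, topo, failed_clusters=2))  # 3000.0, one cluster left
    print(per_server_load(total, topo, failed_clusters=2,
                          failed_servers_per_cluster=2))    # 9000.0, one server left
```

Sizing servers against the failure scenarios rather than normal operation is what lets a single surviving cluster absorb the load of the other two.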

Redundancy

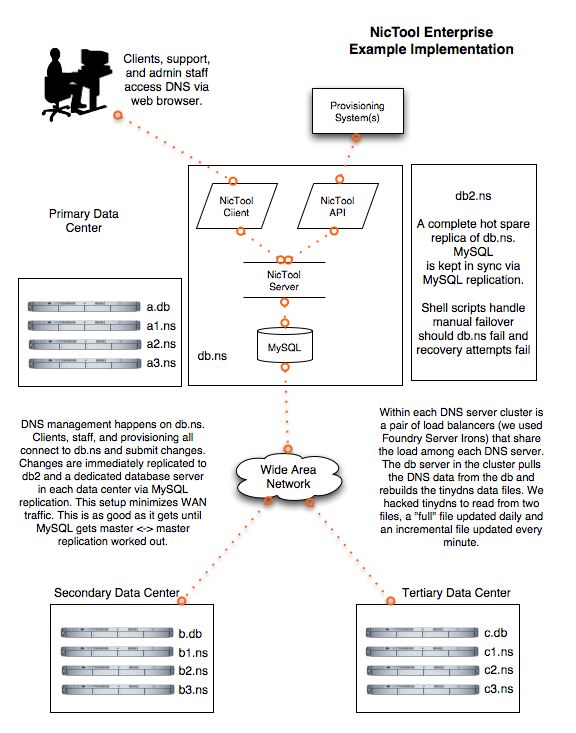

For the purposes of DNS, we cared about redundancy at two levels. The first was at the server level, which we solved with hardware load balancers: we used Foundry ServerIrons to share the load among the three DNS servers in each cluster, monitor system health, and fail servers that weren't behaving. The second was at the network layer. We scaled each cluster to support the query load of all three clusters, so if the network to a data center fails, the remaining clusters carry its queries and you simply wait until it's fixed.
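The server-level redundancy can be sketched as a health-checked round-robin pool. This is only a minimal illustration of the idea, assuming a simple healthy/unhealthy flag per server. It does not reproduce the ServerIron's actual load-balancing algorithm, health checks, or configuration, and the `Backend` and `HealthCheckedPool` names and the hostnames dns1 through dns3 are hypothetical.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True  # set by a (hypothetical) periodic health check


class HealthCheckedPool:
    """Round-robin over a cluster's DNS servers, skipping failed ones."""

    def __init__(self, backends):
        self.backends = list(backends)
        if not self.backends:
            raise ValueError("pool needs at least one backend")
        self._cursor = itertools.cycle(range(len(self.backends)))

    def mark(self, name: str, healthy: bool) -> None:
        """Record a health-check result for one server."""
        for backend in self.backends:
            if backend.name == name:
                backend.healthy = healthy
                return
        raise KeyError(name)

    def pick(self) -> str:
        """Return the next healthy server, trying each at most once."""
        for _ in range(len(self.backends)):
            backend = self.backends[next(self._cursor)]
            if backend.healthy:
                return backend.name
        raise RuntimeError("no healthy servers left in this cluster")


if __name__ == "__main__":
    pool = HealthCheckedPool([Backend("dns1"), Backend("dns2"), Backend("dns3")])
    pool.mark("dns2", healthy=False)  # e.g. dns2 stopped answering queries
    print([pool.pick() for _ in range(4)])  # ['dns1', 'dns3', 'dns1', 'dns3']
```

When every server in a cluster is marked unhealthy, `pick` raises; at that point the load balancer can do nothing more, and the network-level redundancy has to carry the traffic.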

Performance

The DNS servers used for this implementation were all mediocre systems for their time (2001): dual Pentium III 600 or 650 MHz CPUs with 512 MB of RAM. They were chosen principally because they were available. Because tinydns is remarkably efficient, the systems were more than adequate then and are most likely still in use today (three years later).

The database servers were dual 1 GHz systems with 1 GB of RAM each and RAID disks (two RAID 1 volumes). The CPU needs for typical database operations were minimal, but during database dumps (backups, integrity tests, etc.) it was useful to have all the CPU we could get. These systems were high end then and handled their tasks admirably. I'm sure they too are still in service, much the way I left them.
